Power blackout detection

Context

Power blackouts have a major impact on the supply and production chain: they damage assets, waste product, and can even cause accidents.

Being able to anticipate blackouts can significantly reduce their impact.

In a nutshell, what Quasar delivered

- Setup of complete electrical waveform data capture in less than a week

- Powerful, flexible analysis and a short feedback loop make it faster to find precursor patterns

- Future-proof solution thanks to built-in scalability

Challenge

It is possible to anticipate power blackouts by looking for weak signals that precede significant events. Finding these weak signals requires capturing data at the highest possible resolution and performing spectral analysis on it.

The most significant part of the data is the complete three-phase electrical waveform. Resolution can go up to 20 kHz, that is, 20,000 points per second per waveform. This means that a multi-month history is typically at the petabyte scale.
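The arithmetic behind the petabyte figure can be sketched as follows. The point size (an 8-byte timestamp plus an 8-byte float value), the six waveforms per measurement point (voltage and current on each of three phases), and the 180-day horizon are illustrative assumptions, not figures from the deployment, and the result is uncompressed.

```python
# Back-of-the-envelope estimate of raw (uncompressed) waveform data volume.
# Assumptions (illustrative only): each point is an 8-byte timestamp plus an
# 8-byte float value, and a measurement point records voltage and current on
# each of three phases (6 waveforms).

SAMPLE_RATE_HZ = 20_000          # 20 kHz, as stated in the text
BYTES_PER_POINT = 8 + 8          # assumed: timestamp + float64 value
WAVEFORMS_PER_POINT = 6          # assumed: 3 phases x (voltage, current)
SECONDS_PER_DAY = 86_400


def raw_bytes(days: int, measurement_points: int) -> int:
    """Uncompressed bytes produced by `measurement_points` over `days` days."""
    samples_per_waveform = SAMPLE_RATE_HZ * SECONDS_PER_DAY * days
    return (samples_per_waveform * BYTES_PER_POINT
            * WAVEFORMS_PER_POINT * measurement_points)


if __name__ == "__main__":
    per_point = raw_bytes(days=180, measurement_points=1)
    print(f"One measurement point, 180 days: {per_point / 1e12:.1f} TB")
    print(f"Measurement points needed for 1 PB: {1e15 / per_point:.0f}")
```

Under these assumptions, one measurement point produces roughly 30 TB of raw data in 180 days, so a few dozen measurement points reach a petabyte before any compression.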

A data lake is the typical solution because storing data in files is simple. Data warehousing solutions are just not cost-effective at these data volumes.

However, a data lake cannot be queried. Once the data is captured, computations (for example, FFT) must be done ad hoc, which adds latency and cost.

The solution

Quasar can capture the complete waveform data at the highest resolution directly from the MQTT channel.

Each waveform is stored in a separate table, organized as a time series. Tagging allows multiple tables to be queried at the same time.
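Conceptually, tagging acts as an index from labels to tables, so one request can target every waveform that shares a label. The plain-Python sketch below models that idea; the table names, tags, and helper functions are hypothetical and do not represent Quasar's API or query syntax.

```python
from collections import defaultdict

# Hypothetical catalog: one table per waveform, each carrying a set of tags.
TABLES = {
    "site1.feeder3.phase_a.voltage": {"site1", "feeder3", "voltage"},
    "site1.feeder3.phase_b.voltage": {"site1", "feeder3", "voltage"},
    "site1.feeder3.phase_a.current": {"site1", "feeder3", "current"},
    "site2.feeder1.phase_a.voltage": {"site2", "feeder1", "voltage"},
}


def build_tag_index(tables: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert table -> tags into tag -> tables so lookups by tag are direct."""
    index: dict[str, set[str]] = defaultdict(set)
    for table, tags in tables.items():
        for tag in tags:
            index[tag].add(table)
    return index


def tables_with_tags(index: dict[str, set[str]], *tags: str) -> list[str]:
    """Return the tables carrying every requested tag, sorted by name."""
    if not tags:
        return []
    matches = set.intersection(*(index.get(tag, set()) for tag in tags))
    return sorted(matches)


if __name__ == "__main__":
    idx = build_tag_index(TABLES)
    # All voltage waveforms of feeder 3, selected in a single request.
    print(tables_with_tags(idx, "feeder3", "voltage"))
```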

Feature extraction is done by Quasar and does not require a dedicated program. Quasar has built-in aggregation functions that work directly on the data, leveraging the SIMD capabilities of modern processors. For example, Quasar can convert alternating current into direct current in real time.
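The text does not specify how the alternating-to-direct-current conversion is computed. One common interpretation is the root-mean-square (RMS) value over fixed windows, which is the DC value that would deliver the same power. The NumPy sketch below computes it in vectorized form; the one-cycle window (400 samples for 50 Hz mains at 20 kHz) and the 325 V peak are assumptions.

```python
import numpy as np


def windowed_rms(samples: np.ndarray, window: int) -> np.ndarray:
    """RMS of each non-overlapping window; a trailing partial window is dropped."""
    n_windows = len(samples) // window
    trimmed = samples[: n_windows * window].reshape(n_windows, window)
    # Vectorized square, mean, and square root over each row (one row = one window).
    return np.sqrt(np.mean(trimmed ** 2, axis=1))


if __name__ == "__main__":
    fs = 20_000                              # 20 kHz sampling rate
    t = np.arange(fs) / fs                   # one second of samples
    peak = 325.0                             # assumed: ~230 V RMS, 50 Hz mains
    wave = peak * np.sin(2 * np.pi * 50 * t)
    rms = windowed_rms(wave, window=fs // 50)    # one cycle = 400 samples
    print(rms[:3])                           # each value is close to 325 / sqrt(2)
```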

Data scientists can get the data exactly as they need it, when they need it, without any additional steps. This dramatically increases research speed.

For monitoring purposes, electrical data can be visualized in real time by leveraging Grafana and the Quasar Grafana connector.

Quasar is used both for live data and historical data, making model deployments smooth.

Benefits

- Significantly accelerate the search for weak signals that indicate a power blackout, preventing millions of dollars of damage and waste

- Building and deploying models is frictionless, leading to a quick feedback loop to improve the accuracy and thus the gains

- Virtually unlimited history depth, so models can keep gaining accuracy as history accumulates

- Outstanding TCO: extracting features does not require writing custom programs and uses less CPU. Up to 20X less storage space is needed compared to a typical data warehousing solution and up to 1,000X faster feature extraction compared to data lakes

Manufacturing Process Historian

Context

Manufacturing plants use historians, such as GE Historian, to display process data, such as energy consumption, on dashboards or to feed it into analytical tools that create models.

These models help analyze the various operating conditions, for example, by finding patterns of energy consumption correlated with known incidents of product defects.

In a nutshell, what Quasar delivered

- Completely removed all limits imposed by historians in terms of history depth, data volume, and query complexity

- Virtually unlimited history

- No change in the reporting tool used: Quasar replaces the backend transparently

- Storage footprint divided by 20

- Future-proof solution thanks to built-in scalability

Challenge

Historians quickly struggle as history depth grows. Building an accurate model may require pulling more than a year of data, a volume that historians cannot manage, even for smaller plants.

That means that users need to choose between history depth and sampling accuracy to stay within the constraints of the historian.

That makes it very difficult for data scientists to do a detailed analysis of energy consumption, resulting in inaccurate or incorrect models.
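The cost of trading sampling accuracy for history depth is easy to see on synthetic data: averaging high-resolution samples into coarser buckets saves space but can erase the short events a model needs. The signal below (one hour of 1 Hz power readings with a 5-second spike) is made up for illustration.

```python
import numpy as np


def downsample_mean(values: np.ndarray, factor: int) -> np.ndarray:
    """Average consecutive groups of `factor` samples (trailing remainder dropped)."""
    n = len(values) // factor
    return values[: n * factor].reshape(n, factor).mean(axis=1)


if __name__ == "__main__":
    # One hour of synthetic 1 Hz power readings (kW) with a 5-second spike.
    power = np.full(3600, 100.0)
    power[1800:1805] = 400.0
    per_minute = downsample_mean(power, 60)
    print(power.max())       # 400.0 at full resolution
    print(per_minute.max())  # 125.0 after 1-minute averaging
```

At one-minute resolution the 400 kW spike looks like a mild 25% bump, which a model trained on the downsampled history may never learn to associate with a defect.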

The solution

Quasar is connected to the historian with its high-speed ingestion API to capture all the data as it comes, without any loss of quality or precision.

Quasar will use its unique algorithm to compress the data faster than it arrives and use its micro-indexes to reduce future lookup and feature extraction time. This results in significantly reduced disk usage without impacting the queryability of the data.
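Quasar's actual compression algorithm and micro-index format are not described here. As a generic illustration of the two ideas, the sketch below delta-encodes fixed-size blocks of integer samples and keeps a min/max summary per block, so a threshold query can skip blocks whose summary proves they cannot match. The block size, the data, and all names are assumptions; this is not Quasar's implementation.

```python
from dataclasses import dataclass


@dataclass
class Block:
    first: int            # first value, stored as-is
    deltas: list[int]     # differences between consecutive values
    lo: int               # micro-index: minimum value in the block
    hi: int               # micro-index: maximum value in the block


def encode(values: list[int], block_size: int = 4) -> list[Block]:
    """Split values into blocks, delta-encode each one, and record its min/max."""
    blocks = []
    for start in range(0, len(values), block_size):
        chunk = values[start:start + block_size]
        deltas = [b - a for a, b in zip(chunk, chunk[1:])]
        blocks.append(Block(chunk[0], deltas, min(chunk), max(chunk)))
    return blocks


def decode(block: Block) -> list[int]:
    """Rebuild a block's values by accumulating its deltas."""
    out = [block.first]
    for d in block.deltas:
        out.append(out[-1] + d)
    return out


def values_above(blocks: list[Block], threshold: int) -> tuple[list[int], int]:
    """Return the values above `threshold` and how many blocks were decoded."""
    found, decoded = [], 0
    for block in blocks:
        if block.hi <= threshold:     # summary proves no match: skip the block
            continue
        decoded += 1
        found.extend(v for v in decode(block) if v > threshold)
    return found, decoded


if __name__ == "__main__":
    readings = [100, 101, 101, 102, 103, 103, 104, 180, 104, 103, 102, 102]
    blocks = encode(readings)
    print(values_above(blocks, 150))  # ([180], 1): only one of three blocks decoded
```

Small deltas like these fit in far fewer bits than the raw values, which is where a real codec gains space; the sketch keeps them as Python integers for readability.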

Each sensor is stored in a dedicated table holding time series data. Tables can be tagged at will, enabling a flexible querying mechanism based on those tags, for example, to obtain all the sensors of an asset or of a specific area of the plant.

Once Quasar collects all the information, it will serve all queries at the highest resolution possible.

Energy data, for example, can be shown at the highest resolution, which was not possible with a historian without compromising dynamic response.

Benefits

- Build accurate models to improve process quality

- Complete data precision to improve the accuracy of the models

- Circumvent historian limitations without replacing them, minimizing IT infrastructure disruption while enabling next-generation AI

- Outstanding TCO: extracting features does not require writing custom programs and uses less CPU. Up to 20X less storage space is needed compared to a typical data warehousing solution and up to 1,000X faster feature extraction compared to data lakes

Manufacturing facility monitoring

Context

In any manufacturing facility, breakdowns are expensive and disruptive. They can result in wasted material and extensive damage to the assets.

With complete monitoring, it is possible to anticipate these breakdowns, perform root cause analysis, and create a virtuous circle that reduces their occurrence.

In a nutshell, what Quasar delivered

- Report generation time reduced from hours to seconds

- Virtually unlimited history

- No change in the reporting tool used: Quasar replaces the backend transparently

- Storage footprint divided by 20

- Future-proof solution thanks to built-in scalability

Challenge

To provide insights about plant operations, one needs to collect, analyze, and visualize numerous metrics.

These metrics provide a clear understanding of breakdowns and of the mean time to respond to and repair them, and ultimately make it possible to anticipate them.
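Mean time to respond and mean time to repair are averages over breakdown records. A minimal sketch, assuming each record holds when the breakdown started, when a technician responded, and when the asset was back in service (the record layout and data are hypothetical). Repair time is measured here from the start of the breakdown; some organizations measure it from the response instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Breakdown:
    started: datetime     # asset stopped
    responded: datetime   # technician responded
    repaired: datetime    # asset back in service


def mean_minutes(durations: list[timedelta]) -> float:
    """Average of the durations, in minutes (raises on an empty list)."""
    return mean(d.total_seconds() / 60 for d in durations)


def breakdown_metrics(events: list[Breakdown]) -> dict[str, float]:
    """Mean time to respond and mean time to repair, in minutes."""
    return {
        "mean_time_to_respond": mean_minutes([e.responded - e.started for e in events]),
        "mean_time_to_repair": mean_minutes([e.repaired - e.started for e in events]),
    }


if __name__ == "__main__":
    events = [
        Breakdown(datetime(2024, 1, 1, 8, 0), datetime(2024, 1, 1, 8, 10),
                  datetime(2024, 1, 1, 9, 0)),
        Breakdown(datetime(2024, 1, 2, 14, 0), datetime(2024, 1, 2, 14, 20),
                  datetime(2024, 1, 2, 16, 0)),
    ]
    print(breakdown_metrics(events))
    # {'mean_time_to_respond': 15.0, 'mean_time_to_repair': 90.0}
```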

However, a plant with many assets generates tens of millions of records per second. This volume exceeds the capabilities of a typical relational database, the default choice for many of these setups.

As volume increases, updates take longer and longer, sometimes hours.

When the data is connected to a tool such as Power BI, queries are sluggish, and the data lag prevents effective monitoring.

The solution

Quasar can collect all events as they come and store them efficiently. The collection is direct and does not require any third-party software.

Quasar has no practical limit to how many records per second can be inserted and can thus handle hundreds of millions of updates per second at low latency.

Quasar will use its unique algorithm to compress the data faster than it arrives and use its micro-indexes to reduce future lookup and feature extraction time. This results in significantly reduced disk usage without impacting the queryability of the data.

Each sensor is stored in a dedicated table holding time series data. Tables can be tagged at will, enabling a flexible querying mechanism based on those tags, for example, to obtain all the sensors of an asset or of a specific area of the plant.

Microsoft Power BI, or any similar tool, can connect to Quasar directly using ODBC. Queries are instant, delivering end-to-end data freshness under a minute and enabling operational intelligence.

Benefits

- Manufacturing facilities can achieve operational excellence with exhaustive access to all the data, resulting in significantly lower operational costs

- Exhaustive, low-latency monitoring of all assets at the highest possible precision, increasing accuracy and thus potential gains

- Building and deploying models is frictionless, leading to a quick feedback loop to improve the accuracy and thus the gains

- Connect tools such as Microsoft Power BI directly and do data science without writing code, reducing the strain on dedicated teams

- Outstanding TCO: extracting features does not require writing custom programs and uses less CPU. Up to 20X less storage space is needed compared to a typical data warehousing solution and up to 1,000X faster feature extraction compared to data lakes

Asset health: doctor blade

Context

Doctor blades are used in printing to remove the excess ink from a cylinder to create a uniform layer of ink.

If the blade is incorrectly sharpened, poorly adjusted, or damaged, the result can be millions of dollars of asset damage.

In a nutshell, what Quasar delivered

- Greatly increased accuracy of predictions and models by capturing the full waveform data and performing spectral analysis

- A shorter model-to-production loop that empowers data analysts and reliability engineers

- 1,000X faster queries compared to a data warehousing solution while using fewer cloud resources

- Storage footprint divided by 10

- Future-proof solution thanks to built-in scalability

Challenge

The blade is changed or adjusted based on a technician's intuition and experience, or on current practice.

However, collecting the vibration data coming out of the sensors makes it possible to identify common patterns present before problems happen.

The goal is to have a dashboard with precise information regarding the imminence of a problem and its type. In addition, it is possible with this approach to find the optimal time to change the doctor blade, preventing asset damage and optimizing parts inventory.

However, this level of accuracy requires working on the entire vibration waveform and performing a frequency analysis. Complete waveform data is usually several thousand points per second per sensor, resulting in massive data sets.

The other challenge is that problems are sometimes identified just minutes before they happen, so end-to-end data latency must stay below one minute.

These latency and write-volume requirements immediately disqualify data warehousing technology.

An approach that solves the write volume problem is to store the data in files and then have ad-hoc programs perform the feature extraction. This, however, means that each analysis may have a high CPU time cost, and it requires writing new programs if the feature extraction needs to change.

Finally, these programs must be highly efficient so as not to add to the data latency. If it takes 15 minutes to extract a feature, the information may come too late to act on.

The solution

Using Quasar, firms can capture the complete waveform data using dedicated connectors. The capture can happen at the edge or via MQTT.

Quasar will use its unique algorithm to compress the data faster than it arrives and use its micro-indexes to reduce future lookup and feature extraction time. This results in significantly reduced disk usage without impacting the queryability of the data.

Each sensor is stored in a dedicated table holding time series data. Tables can be tagged at will, enabling a flexible querying mechanism based on those tags, for example, to obtain all the sensors of an asset or all the sensors of a mill.

Quasar has built-in feature extraction capabilities that leverage the processor's SIMD instructions. It can, in real time, perform complex statistical analysis, pivot the data, compute FFTs, and much more.

This means that feature extraction does not require ad-hoc programs and can be tailored by writing a simple SQL query.
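To illustrate the kind of spectral feature involved, the NumPy sketch below computes the amplitude spectrum of a vibration signal and returns its dominant frequency, the sort of computation an ad-hoc program would otherwise run over files. The 5 kHz sampling rate and the synthetic signal (a 120 Hz component plus a weaker 350 Hz one) are assumptions, and this is not how Quasar implements FFT internally.

```python
import numpy as np


def dominant_frequency(signal: np.ndarray, fs: float) -> float:
    """Frequency (Hz) of the largest non-DC peak in the amplitude spectrum."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[0] = 0.0                      # ignore the DC (mean) component
    return float(freqs[np.argmax(spectrum)])


if __name__ == "__main__":
    fs = 5_000                             # assumed: 5 kHz vibration sensor
    t = np.arange(fs) / fs                 # one second of data, 1 Hz resolution
    signal = np.sin(2 * np.pi * 120 * t) + 0.3 * np.sin(2 * np.pi * 350 * t)
    print(dominant_frequency(signal, fs))  # ~120.0
```

Tracking how such a peak shifts or grows over time is one way a frequency analysis can reveal an emerging problem.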

Quasar can keep a deep history, meaning that the same system is used for operational reporting and building the strategy. This significantly reduces the time between devising a new approach and deploying it in production.

Benefits

- Firms can perform advanced data analysis to protect their asset health and optimize their parts inventory, increasing competitiveness

- Capture the entire waveform without any limitation in history depth, increasing accuracy and thus potential gains

- Devising and deploying new strategies is extremely fast since Quasar holds both the history and the live data, increasing the productivity of scarce data science resources

- Extracting features does not require writing custom programs, increasing flexibility

- Outstanding TCO: extracting features does not require writing custom programs and uses less CPU. Up to 20X less storage space is needed compared to a typical data warehousing solution and up to 1,000X faster feature extraction compared to data lakes

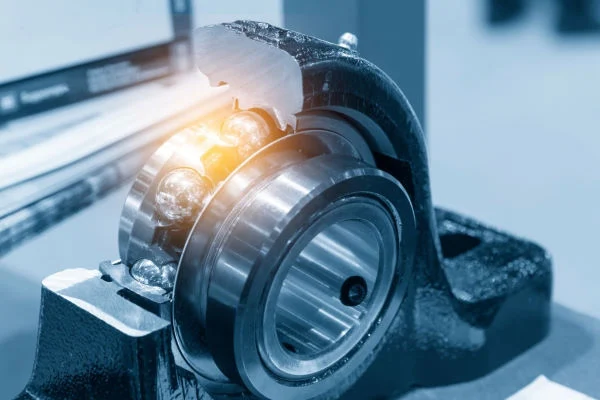

Asset health: ball bearing

Context

The papermaking process uses spherical roller bearings. These bearings require proper lubrication and maintenance; failure to provide it can result in millions of dollars in damage to assets.

In a nutshell, what Quasar delivered

- Greatly increased accuracy of predictions and models by capturing the full waveform data and performing spectral analysis

- A shorter model-to-production loop that empowers data analysts and reliability engineers

- 1,000X faster queries compared to a data warehousing solution while using fewer cloud resources

- Storage footprint divided by 10

- Future-proof solution thanks to built-in scalability

Challenge

Technicians need to inspect the machine visually and check its behavior to know if the bearing needs to be changed. When an asset breaks down, it can take a very long time for the technician to understand the root cause.

By collecting the vibration data coming out of the sensors, it’s possible to identify typical patterns before a problem happens. The patterns can also help distinguish between possible root causes of the problem.

The goal is to have a dashboard with precise information regarding the imminence of a problem and its type. In addition, this approach makes it possible to anticipate wear and tear early enough to schedule downtime instead of dealing with a forced interruption.

However, this level of accuracy requires working on the entire vibration waveform and performing a frequency analysis. Complete waveform data is usually several thousand points per second per sensor, resulting in massive data sets.

The other challenge is that problems are sometimes identified just minutes before they happen, so end-to-end data latency must stay below one minute.

These latency and write-volume requirements immediately disqualify data warehousing technology.

An approach that solves the write volume problem is to store the data in files and then have ad-hoc programs perform the feature extraction. This, however, means that each analysis may have a high CPU time cost, and it requires writing new programs if the feature extraction needs to change.

Finally, these programs must be highly efficient so as not to add to the data latency. If it takes 15 minutes to extract a feature, the information may come too late to act on.

The solution

Using Quasar, firms can capture the complete waveform data using dedicated connectors. The capture can happen at the edge or via MQTT.

Quasar will use its unique algorithm to compress the data faster than it arrives and use its micro-indexes to reduce future lookup and feature extraction time. This results in significantly reduced disk usage without impacting the queryability of the data.

Each sensor is stored in a dedicated table holding time series data. Tables can be tagged at will, enabling a flexible querying mechanism based on those tags, for example, to obtain all the sensors of an asset or all the sensors of a mill.

Quasar has built-in feature extraction capabilities that leverage the processor's SIMD instructions. It can, in real time, perform complex statistical analysis, pivot the data, compute FFTs, and much more.

This means that feature extraction does not require ad-hoc programs and can be tailored by writing a simple SQL query.
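Two statistical features commonly used in vibration-based bearing monitoring are kurtosis and crest factor; both rise when short impacts, such as those from a damaged rolling element, appear in an otherwise smooth signal. The NumPy sketch below computes them on synthetic signals. The choice of these two features, the sampling rate, and the impact pattern are illustrative assumptions, not a description of the deployed model.

```python
import numpy as np


def kurtosis(x: np.ndarray) -> float:
    """Pearson kurtosis (not excess): about 1.5 for a pure sine, 3 for Gaussian noise."""
    centered = x - x.mean()
    return float(np.mean(centered ** 4) / np.mean(centered ** 2) ** 2)


def crest_factor(x: np.ndarray) -> float:
    """Peak absolute value divided by RMS: about 1.41 for a pure sine."""
    return float(np.max(np.abs(x)) / np.sqrt(np.mean(x ** 2)))


if __name__ == "__main__":
    fs = 10_000                          # assumed sampling rate
    t = np.arange(fs) / fs               # one second of data
    healthy = np.sin(2 * np.pi * 50 * t)
    damaged = healthy.copy()
    damaged[:: fs // 20] += 8.0          # synthetic impacts, 20 per second
    for name, sig in (("healthy", healthy), ("damaged", damaged)):
        print(name, round(kurtosis(sig), 2), round(crest_factor(sig), 2))
```

Computed per time window and tracked over weeks, such features give a simple trend that can feed the kind of dashboard described above.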

Benefits

- Firms can perform advanced data analysis to protect their asset health and schedule maintenance, resulting in millions of dollars in savings per year

- Capture the entire waveform without any limitation in history depth, increasing accuracy and thus potential gains

- Devising and deploying new strategies is extremely fast since Quasar holds both the history and the live data, increasing the productivity of scarce data science resources

- Extracting features does not require writing custom programs, increasing flexibility

- Outstanding TCO: extracting features does not require writing custom programs and uses less CPU. Up to 20X less storage space is needed compared to a typical data warehousing solution and up to 1,000X faster feature extraction compared to data lakes